Banking & Financial Services

Quality engineering optimizes a DLT platform

Client

A leading provider of financial services digitization solutions

Goal

Reliability assurance for a distributed ledger technology (DLT) platform

Tools and Technologies

Kotlin, Java, HTTP Client, AWS, Azure, GCP, G42, OCP, AKS, EKS, Docker, Kubernetes, Helm Chart, Terraform

Business Challenge

A leader in blockchain-based digital financial services required assurance for the non-GUI (Graphical User Interface) components, Command Line Interface (CLI), microservices and Representational State Transfer (REST) APIs of a Distributed Ledger Technology (DLT) platform. It also needed platform reliability assurance on AWS and Azure services (EKS, AKS) to ensure availability, scalability, observability, monitoring and resilience (disaster recovery). In addition, the client wanted to identify capacity recommendations and any performance bottlenecks (whether impacting throughput or individual transaction latency), and required comprehensive automation coverage for older and newer product versions as well as management of frequent, monthly deliveries of multiple DLT product versions.

Solution

- 130+ Dapps were developed and enhanced on the existing automation framework for terminal CLI and cluster utilities

- Quality engineering was streamlined with real-time dashboarding via Grafana and Prometheus

- Coverage for older and newer versions of the DLT platform was automated to support smooth, frequent deliveries and confidence in releases

- The test case management tool, Xray, was implemented for transparent automation coverage

- Utilities were developed to execute a testing suite on AKS, EKS and local Mac/Windows/Linux cluster environments on a daily or as-needed basis

Outcomes

- Automation shortened release cycles from 1x/month to 1x/week; leads testing time was reduced by 80%

- Test automation coverage with 2,000 test cases (TCs) was developed, with a pass rate of 96% in daily runs

- Compatibility was created across AWS-EKS, Azure-AKS, Mac, Windows, Linux and local cluster

- Increased efficiency in deliverables was displayed, along with an annual $350K savings for TCMs

- An average throughput of 25 complete workflows per second was sustained

- A 95th percentile flow completion time of no more than 10 seconds was achieved
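The throughput and latency outcomes above can be checked against raw run data with a few lines of code. The sketch below is illustrative only: the sample completion times, the 4-second measurement window, and the helper names `percentile` and `summarize` are assumptions, not details from the engagement, and nearest-rank is just one of several common percentile definitions.

```python
import math

def percentile(values, pct):
    """Nearest-rank percentile: the smallest sample with at least pct% of samples at or below it."""
    if not values:
        raise ValueError("no samples")
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]

def summarize(completion_times_s, window_s):
    """Return (throughput in workflows per second, 95th percentile completion time in seconds)."""
    throughput = len(completion_times_s) / window_s
    return throughput, percentile(completion_times_s, 95)

# Hypothetical sample: 100 workflows completed within a 4-second window.
samples = [1.0 + 0.05 * i for i in range(100)]
throughput, p95 = summarize(samples, window_s=4)

# Targets taken from the outcomes: 25 workflows/second sustained, p95 no more than 10 seconds.
meets_targets = throughput >= 25 and p95 <= 10
```

In practice the same check would typically run over the metrics already collected for the dashboards rather than over an in-memory list.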

Our experts can help you find the right solutions to meet your needs.


Productionizing Generative AI Pilots

Get scalable solutions and unlock insights from information siloed across an enterprise by automating data extraction, streamlining workflows, and leveraging models.

Enterprises have vast amounts of unstructured information such as onboarding documents, contracts, financial statements, customer interaction records, confluence pages, etc., with valuable information siloed across formats and systems.

Generative AI is now starting to unlock new capabilities, with vector databases and Large Language Models (LLMs) tapping into unstructured information using natural language, enabling faster insight generation and decision-making. The advent of LLMs, exemplified by the publicly available ChatGPT, has been a game-changer for information retrieval and contextual question answering. As LLMs evolve, they’re no longer limited to text. They’re becoming multi-modal, capable of interpreting charts and images. With a large number of offerings available, it is very easy to develop Proofs of Concept (PoCs) and pilot applications. However, to derive meaningful value, the PoCs and pilots need to be productionized and delivered at significant scale.

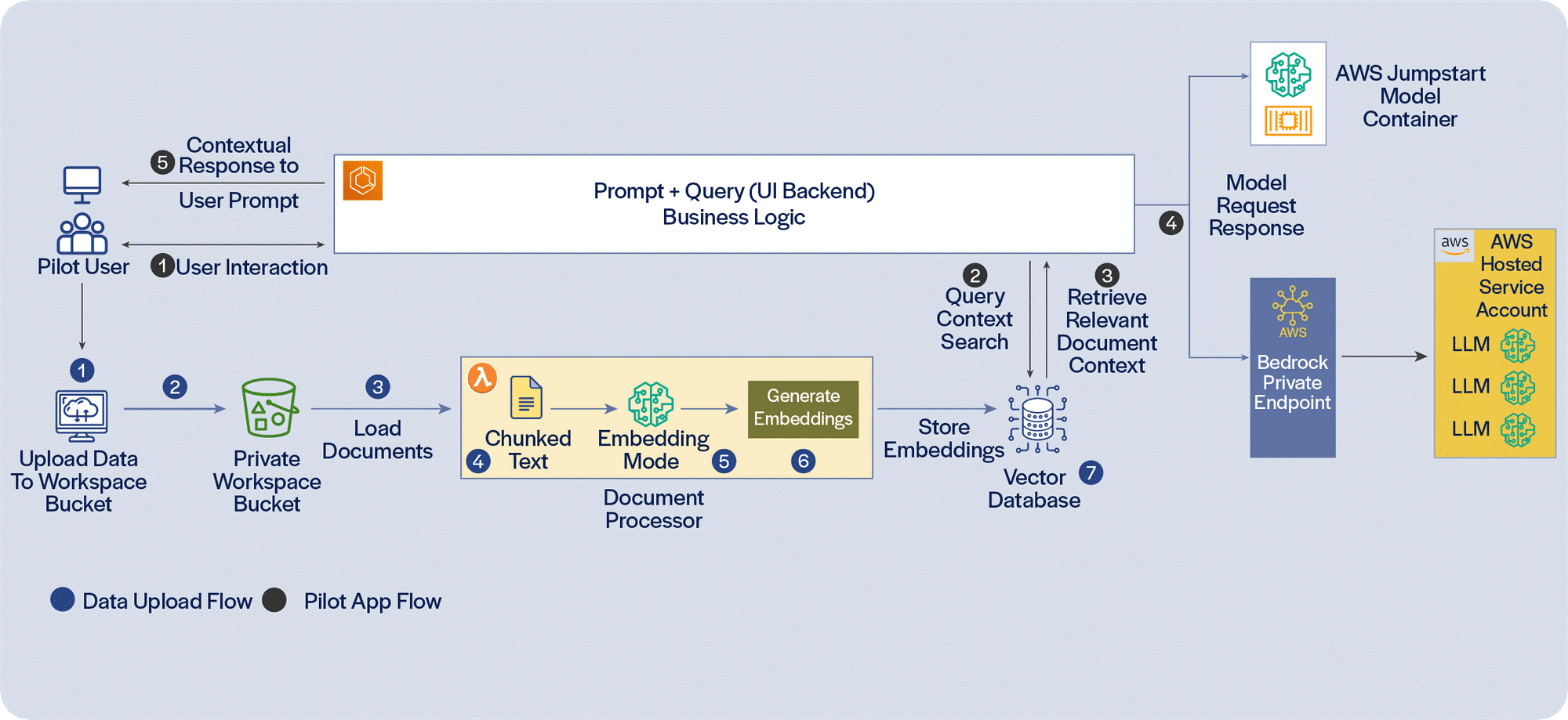

PoCs/pilots deal with only the tip of the iceberg. Productionizing needs to address a lot more that does not readily meet the eye. To scale the extraction and indexing of information, we need to establish a pipeline that would ideally be event-driven: as new documents are generated and become available, possibly through an S3 document store and SQS (Simple Queue Service), events initiate parsing of the documents for metadata, chunking, creation of vector embeddings, and persistence of the metadata and embeddings to suitable stores. The pipeline also needs logging, exception handling, notification and automated retries for when it encounters issues.
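A minimal sketch of such a pipeline follows, using only the Python standard library so it runs anywhere. Everything in it is an assumption made for illustration: an in-memory `queue.Queue` stands in for SQS, a dictionary stands in for the S3 document store, `fake_embed` is a hash-based placeholder rather than a real embedding model, and the function names are hypothetical.

```python
import hashlib
import logging
import queue

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("ingest")

def chunk_text(text, size=200, overlap=50):
    """Split text into overlapping character windows."""
    if not text:
        return []
    step = size - overlap
    return [text[i:i + size] for i in range(0, max(len(text) - overlap, 1), step)]

def fake_embed(chunk, dims=8):
    """Placeholder embedding (hash bytes scaled to [0, 1]); a real pipeline would call a model."""
    digest = hashlib.sha256(chunk.encode("utf-8")).digest()
    return [b / 255 for b in digest[:dims]]

def process_event(event, fetch, vector_store, metadata_store):
    """Fetch one document, then persist its metadata and chunk embeddings."""
    text = fetch(event["key"])
    chunks = chunk_text(text)
    metadata_store[event["key"]] = {"chars": len(text), "chunks": len(chunks)}
    vector_store[event["key"]] = [fake_embed(c) for c in chunks]

def run_pipeline(events, fetch, vector_store, metadata_store, max_attempts=3):
    """Drain the queue, retrying failed events and parking exhausted ones in a dead-letter list."""
    dead_letters = []
    while not events.empty():
        event = events.get()
        attempt = event.get("attempt", 1)
        try:
            process_event(event, fetch, vector_store, metadata_store)
            log.info("indexed %s", event["key"])
        except Exception as exc:
            log.warning("attempt %d failed for %s: %s", attempt, event["key"], exc)
            if attempt < max_attempts:
                events.put({**event, "attempt": attempt + 1})
            else:
                dead_letters.append(event["key"])  # a notification hook would go here
    return dead_letters

# Hypothetical run: one document that exists and one that does not.
documents = {"contract.txt": "x" * 450}
events = queue.Queue()
events.put({"key": "contract.txt"})
events.put({"key": "missing.txt"})
vectors, metadata = {}, {}
failed = run_pipeline(events, documents.__getitem__, vectors, metadata)
```

The dead-letter list is the design point worth noting: a document that keeps failing is set aside for notification and follow-up instead of blocking the rest of the queue.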

While developing pilot applications using Generative AI is easy, teams need to carefully work through a number of additional considerations to take these applications to production, scale the volume of documents and the user-base, and deliver full value. It would be easier to do this across multiple RAG (Retrieval-Augmented Generation) applications, utilizing conventional NLP (Natural Language Processing) and classification techniques to direct user requests to different RAG pipelines for different queries. Implementing the capabilities required around productionizing Generative AI applications using LLMs in a phased manner will ensure that value can be scaled as the overall solution architecture and infrastructure is enhanced.
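To make the routing idea concrete, the sketch below directs a user request to one of several RAG pipelines using simple keyword overlap, a deliberately basic stand-in for the conventional NLP and classification techniques mentioned above. The pipeline names, vocabularies, and the `route_query` function are hypothetical.

```python
import re

# Hypothetical pipelines and trigger vocabularies; not taken from any real deployment.
ROUTES = {
    "contracts": {"contract", "clause", "termination", "renewal"},
    "financials": {"revenue", "balance", "statement", "ebitda"},
    "onboarding": {"onboarding", "kyc", "account", "identity"},
}

def route_query(query, routes=ROUTES, default="general"):
    """Pick the pipeline whose vocabulary overlaps most with the query tokens."""
    tokens = set(re.findall(r"[a-z0-9]+", query.lower()))
    scores = {name: len(tokens & vocab) for name, vocab in routes.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else default

contract_route = route_query("What is the termination clause in this contract?")
fallback_route = route_query("Hello there")
```

A trained text classifier could replace the keyword scoring without changing how callers use `route_query`.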

Read our perspective paper for more insights on Productionizing Generative AI Pilots.


How Gen AI Can Transform Software Engineering

Unlocking efficiency across the software development lifecycle, enabling faster delivery and higher quality outputs.

Generative AI has enormous potential for business use cases, and its application to software engineering is equally promising.

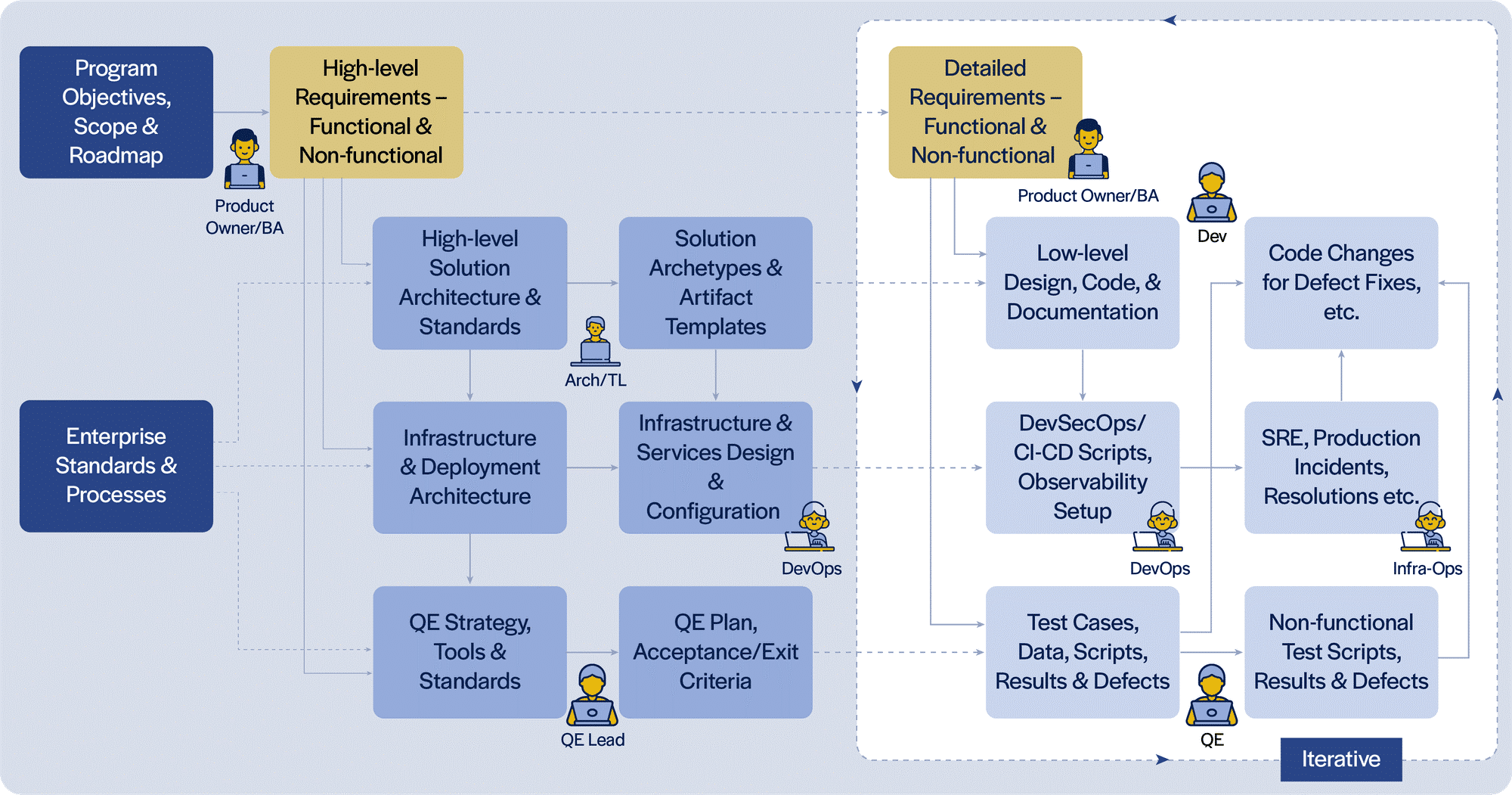

In our experience, development activities, including automated test and deployment scripts, account for only 30-50% of the time and effort spent across the software engineering lifecycle. Within that, only a fraction of the time and effort is spent in actual coding. Hence, to realize the true promise of Generative AI in software engineering, we need to look across the entire lifecycle.

A typical software engineering lifecycle involves a number of different personas (Product Owner, Business Analyst, Architect, Quality Assurance/ Tech Leads, Developer, Quality/ DevSecOps/ Platform Engineers), each using their own tools and producing a distinct set of artifacts. Integrating these different tools through a combination of Gen AI software engineering extensions and services will help streamline the flow of artifacts through the lifecycle, formalize the hand-off reviews, enable automated derivation of initial versions of related artifacts, etc.

As an art-of-the-possible exercise, we developed extensions (for VS Code IDE and Chrome Browser at this time) incorporating the above considerations. Our early experimentation suggests that Generative AI has the potential to enable more complete and consistent artifacts. This results in higher quality, productivity and agility, reducing churn and cycle time, across parts of the software engineering lifecycle that AI coding assistants do not currently address.

Complementary approaches to automate repetitive activities through smart templating, leveraging Generative AI and traditional artifact generation and completion techniques, can help save time, let the team focus on higher-value activities and improve overall satisfaction. However, there are key considerations in order to do this at scale across many teams and team members. To enable teams to become high-performing, the Gen AI software engineering extensions and services need to provide capabilities around standardization and templatization of standard solution patterns (archetypes) and formalize the definition and automation of steps of doneness for each artifact type.

Read our perspective paper for more insights on How Gen AI Can Transform Software Engineering through streamlined processes, automated tasks, and augmented collaboration, bringing faster, higher-quality software delivery.

NC Tech Association Leadership Summit 2024

Iris Software will participate in the exclusive, attendance-capped, annual Leadership Summit hosted by the NC Tech Association, along with its board of directors and advisors, on August 7 & 8, 2024. Our representative, Senior Client Partner, Michel Abranches, will be among the executives gathering for the Summit, at the Pinehurst Resort in Pinehurst, NC, to network and discuss a variety of topics relevant to tech leaders and the projects and associates they manage.

The theme of this year’s summit is Adaptive Leadership. The event includes keynote addresses, executive workshops, and two panel discussions on ‘Why digital transformation is more about people than technology’ and ‘Building resilient tech teams: the power of emotional intelligence.’

As a technology provider to Fortune 500 and other leading global enterprises for more than 30 years, Iris is a trusted choice for leaders who want to realize the full potential of digital transformation. We deliver complex, mission-critical software engineering, application development, and advanced tech solutions that enhance business competitiveness and achieve key outcomes. Our agile, collaborative, right-sized teams and high-trust, high-performance, award-winning culture ensure clients enjoy top value and experience.

Contact Michel Abranches, based in our Charlotte, NC office, or visit www.irissoftware.com for details and success stories about our innovative approach and how we are leveraging the latest in AI / Gen AI / ML, Automation, Cloud, DevOps, Data Science, Enterprise Analytics, Integrations, and Quality Engineering.


Real world asset tokenization can transform financial markets

Integration with Distributed Ledger Technologies is critical to realizing the full potential of tokenization.

The global financial markets create and deal in multiple asset classes, including equities, bonds, forex, derivatives, and real estate investments. Each of them constitutes a multi-trillion-dollar market. These traditional markets encounter numerous challenges in terms of time and cost which impede accessibility, fund liquidity, and operational efficiencies. Consequently, the expected free flow of capital is hindered, leading to fragmented, and occasionally limited, inclusion of investors.

In response to these challenges, today's financial services industry seeks to explore innovative avenues, leveraging advancements such as Distributed Ledger Technology (DLT). Using DLTs, it is feasible to tokenize assets, thus enabling issuance, trading, servicing and settlement digitally, not just in whole units, but also in fractions.

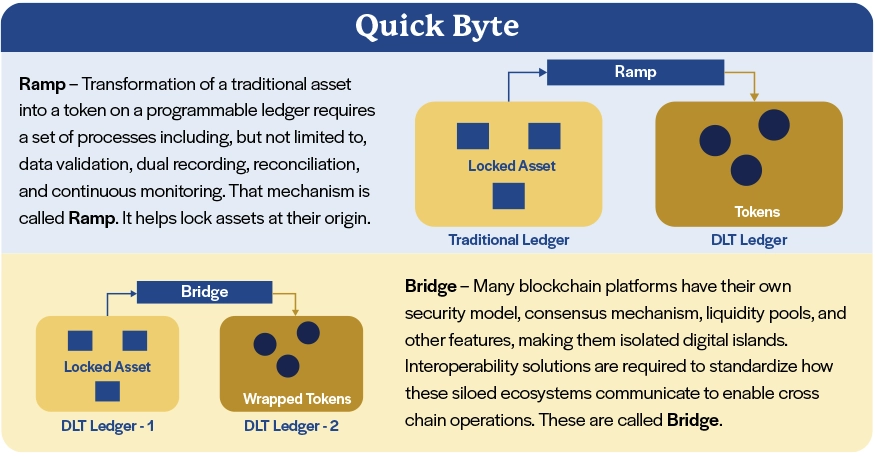

Asset tokenization is the process of converting and portraying the unique properties of a real-world asset, including ownership and rights, on a Distributed Ledger Technology (DLT) platform. Digital and physical real-world assets, such as real estate, stocks, bonds, and commodities, are depicted by tokens with distinctive symbols and cryptographic features. These tokens exhibit specific behavior as part of an executable program on a blockchain.

Many domains, especially financial institutions, have started recognizing the benefits of tokenization and begun to explore this technology. Some of the benefits are fractional ownership, increased liquidity, efficient transfer of ownership, ownership representation and programmability.

With the recent surge in the adoption of tokenization, a diverse array of platforms has emerged, paving the way for broader success, but at the same time creating fragmented islands of ledgers and related assets. As capabilities mature and adoption grows, interconnectivity and interoperability across ledgers representing different institutions issuing/servicing different assets could improve, creating a better integrated market landscape. This would be critical to realizing the promise of asset tokenization using DLT.

Read our Perspective Paper for more insights on asset tokenization and its potential to overcome the challenges, the underlying technology, successful use cases, and issues associated with implementation.

Meet our team at the AWS Summit in NYC July 2024

Happening at the Jacob Javits Convention Center on the 10th of July, the 2024 AWS Summit New York promises more than 170 sessions on all things cloud and data - from data lake architecture, data governance, data sharing, data engineering and data streaming to machine learning (ML) and ML Ops, data warehouses, business insights and visualization, and data strategy. It also offers interaction with AWS experts, builders, customers, and AWS partners, including Iris Software. All levels of experience – from foundational and intermediate to advanced or expert - can learn and share insights on cloud migration, generative AI, data analytics, as well as industry solutions, challenges and top providers.

An Iris team with extensive and wide-ranging technology and domain experience is attending the Summit. Our professionals are ready and excited to discuss the cloud and data solutions and infrastructure modernization we provide to leading companies across many industries, as well as the advances in emerging tech that we leverage to further aid our clients’ business competitiveness, leadership and digital transformation journeys.

Our Leaders

- Vinodh Arjun, Vice President, Head of Cloud Practice

- Prem Swarup, Vice President, Head of Data & Analytics Practice

- Ravi Chodagam, Vice President, Head of Enterprise Services Sales

Financial Services Client Partners:

Brokerage & Wealth Management; Capital Markets & Investment Banking; Commercial & Corporate Banking; Compliance – Risk & AML; Retail Banking & Payments

Enterprise Services Client Partners:

Insurance, Life Sciences, Manufacturing, Pharmaceutical, Professional Services, Transportation & Logistics

Contact our team to learn more about our innovative approach and advanced technology solutions in AI / ML, Application Development, Automation, Cloud, DevOps, Data Science, Enterprise Analytics, Integrations, and Quality Engineering, which enhance security, scalability, reliability, cost-efficiency, and compliance. Explore how we can add valuable impact to harnessing and monetizing data, optimizing customer experiences, and empowering developers. For more insights, read our Perspective Papers on Cloud Migration Challenges and Solutions and Succeeding in ML Ops Journeys.

Gen AI interface enhances API productivity and UX

Client

Leading logistics services provider

Goal

Improve API functionality and developer team’s productivity and user experience

Tools and Technologies

OpenAI (GPT-3.5 and GPT-4 Turbo LLMs), AWS Lambda, Streamlit, Python, Apigee

Business Challenge

A leading logistics provider offers an API Developer Portal as a central hub for managing APIs, enabling collaboration, documentation, and integration efforts, but faces limitations, including:

- Difficulty comprehending schemas, necessitating continued reliance on developers

- No means to individually search for API operations on the API Developer Portal

- Difficulties keeping track of changes in newly-released API versions

- Potential week-long delays as business analysts or product owners must engage developers to check if existing APIs can support new website functionalities

Solution

By integrating Gen AI technology with the APIs, we provided a user-friendly chat interface for business users. Features include:

- Conversational interface for API interaction, eliminating the need for technical expertise to interact directly with APIs

- Search mechanism for API operations, query parameters, and request attributes

- Version comparison and tailored response generation

- Backend API execution according to user query needs
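The operation search and version comparison features can be pictured with a small sketch. It assumes each API version is reduced to a map from operation to its set of parameters. That data shape, the sample operations, and the functions `diff_operations` and `search_operations` are assumptions made for illustration, not the portal's actual design.

```python
def diff_operations(old, new):
    """Compare two {operation: set_of_parameters} maps and report what changed."""
    changed = {}
    for op in sorted(old.keys() & new.keys()):
        if old[op] != new[op]:
            changed[op] = {
                "added_params": sorted(new[op] - old[op]),
                "removed_params": sorted(old[op] - new[op]),
            }
    return {
        "added": sorted(new.keys() - old.keys()),
        "removed": sorted(old.keys() - new.keys()),
        "changed": changed,
    }

def search_operations(spec, term):
    """Return operations whose name or parameters contain the search term."""
    term = term.lower()
    return sorted(
        op for op, params in spec.items()
        if term in op.lower() or any(term in p.lower() for p in params)
    )

# Hypothetical API versions for a logistics service.
v1 = {
    "GET /shipments": {"status", "page"},
    "POST /shipments": {"origin", "destination"},
    "GET /rates": {"weight"},
}
v2 = {
    "GET /shipments": {"status", "page", "carrier"},
    "POST /shipments": {"origin", "destination"},
    "GET /tracking/{id}": {"id"},
}

release_notes = diff_operations(v1, v2)
carrier_ops = search_operations(v2, "carrier")
```

A structured diff like `release_notes` is also a convenient input for an LLM that turns it into a plain-language summary for business users.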

Outcomes

- Business users are now empowered with a chat-based interface for querying API details

- Users can seamlessly explore APIs, streamlining collaboration with the API team and reducing onboarding time to one or two days, ultimately enhancing the customer experience for all stakeholders

- Developer productivity improved with the AI-powered tools in the API Developer Portal

- Functionality is enhanced by version comparison, individual API operation search, and tailored responses


Gen AI summarization solution aids lending app users

Client

Commercial banking unit of a large Canadian bank

Goal

Help lenders access information for complex lending applications on a more timely basis and simplify onboarding of new users

Tools and Technologies

PyPDF2, Meta

Business Challenge

As a part of the credit adjudication process for a transaction, commercial bankers use an application to create summaries, memos and rating alerts as needed, which are instrumental for ongoing Capital at Risk (CaR) monitoring, Risk Profiling, Risk Adjusted Return on Capital (RAROC) computations, etc.

There is a significant amount of complexity involved in understanding this application due to the diversity in types of borrowers and loans, the nature of collateral, etc. Users typically ask questions such as "How do I create a transaction report for my deal?" and "How do I update an existing deal?"

All of this information is spread across multiple user guides and FAQ documents that may run into hundreds of pages.

Solution

- Ringfenced a knowledge base comprised of the user guides of various functionalities (e.g., facility creation, borrower information, etc.)

- Built a custom-developed, React-based front-end for the conversational assistant to interact with the users

- Parsed, formatted and extracted text chunks from these documents using libraries such as PDFMiner and PyPDF2

- Created vector embeddings using a sentence-transformer embedding model (all-MiniLM-L6-v2) and stored them as indices in the Facebook AI Similarity Search (FAISS) vector database

- Converted the user query into a vector embedding, searched it against the vector database and leveraged a local LLM (Llama-2-7B-chat) to generate summarized responses based on the context returned by the similarity search
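The retrieval steps above follow a common pattern that can be sketched without any external libraries. In the sketch below, a bag-of-words vector and a brute-force cosine search stand in for the sentence-transformer model and the FAISS index, and `build_prompt` only assembles the text that would be passed to the LLM. The sample chunks, function names, and prompt wording are all assumptions.

```python
import math
import re
from collections import Counter

def embed(text):
    """Stand-in embedding: a bag-of-words term-count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def top_k(query, chunks, k=2):
    """Return the k chunks most similar to the query."""
    q = embed(query)
    return sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)[:k]

def build_prompt(query, context_chunks):
    """Assemble the context and question into a single prompt string for the LLM."""
    context = "\n".join(f"- {c}" for c in context_chunks)
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"

# Hypothetical user-guide chunks.
chunks = [
    "To create a transaction report, open the deal and select Reports.",
    "Borrower information is maintained on the borrower profile screen.",
    "To update an existing deal, open it and choose Edit.",
]
query = "How do I create a transaction report for my deal?"
hits = top_k(query, chunks, k=1)
prompt = build_prompt(query, hits)
```

Swapping `embed` for a real embedding model and `top_k` for a vector index changes the quality and speed of retrieval, not the overall flow.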

Outcomes

Our custom solution was a conversational agent built using Generative AI, which summarizes relevant information from multiple documents.

It significantly:

- Improved existing users’ ability to access relevant information on a timely basis

- Simplified the migration of bankers and integrations of lending applications resulting from merger or acquisition


Delivering intelligence with speed and scale

Data Science Engineering and Data & ML Ops are key to scaling the intelligence part of the data monetization lifecycle in the cloud.

Do you trust your data?

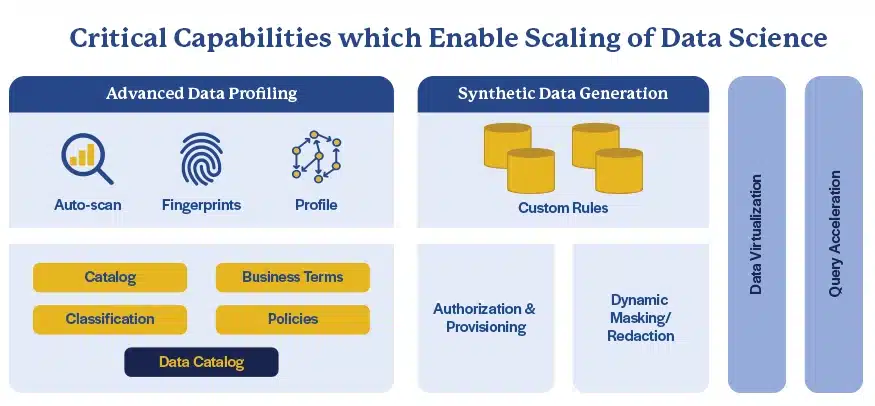

Data-driven organizations are ensuring that their data assets are cataloged and that lineage is established, so they can derive full value from those assets.

Through a number of digital initiatives over the past decade, organizations have collated a lot of information. In addition to structured data, they are collating unstructured and semi-structured formats, e.g., digitized contracts and audio/video of customer interactions. The opportunities to apply established and emerging AI/ML techniques and models to this wide variety of information and derive intelligence and enhanced insights have significantly increased.

Cloud and the evolving technologies around Data Engineering, Data & ML Ops, Data Science, and AI/ML (e.g., Generative AI) offer a significant opportunity to overcome the limitations and deliver intelligence with speed and at scale. While AI/ML models have grown in number and sophistication and become easier to deploy, train/tune, and use, they need information at scale to be transformed into features. Delivering intelligence at scale requires more than just data lakes and lake-houses. It also requires the overall ability to support multiple modeling/data science teams working on multiple problems/opportunities concurrently. Data Science Engineering and Data & ML Ops are key to scaling the intelligence part of the data monetization lifecycle. Teams need to understand data science/modeling lifecycles to effectively scale intelligence.

In conclusion, organizations demand intelligence at scale and at speed. Emerging technologies like Generative AI demand more powerful infrastructure (e.g., GPU farms). Cloud technologies and services enable these. With support for Python across the intelligence lifecycle, it has become easier to bring together data engineering and data science teams, and the environments they need are easier to provision and use in the cloud.

To know more about the benefits, challenges, and best practices for scaling various stages of deriving intelligence from data on cloud environments, read the perspective paper here.


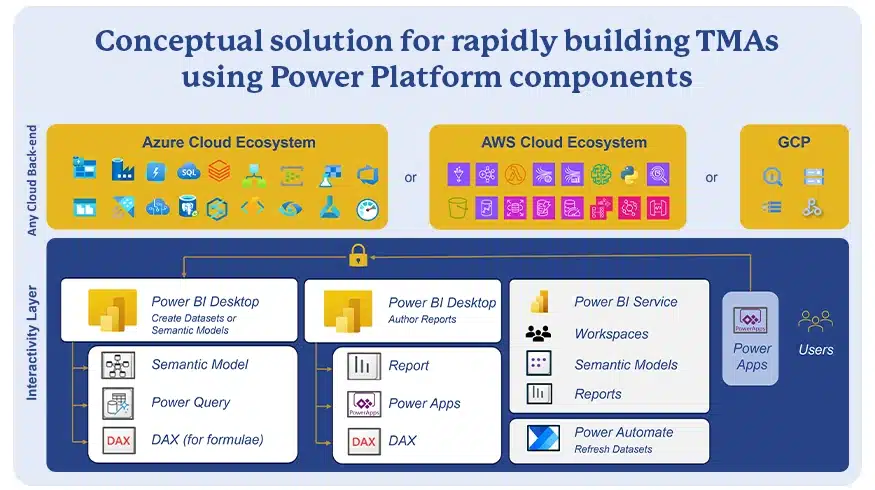

How Low-code Empowers Mission-critical End Users

Low-code platforms enable rapid conversions to technology-managed applications that provide end users with rich interfaces, powerful configurations, easy integrations, and enhanced controls.


Many large and small enterprises utilize business-managed applications (BMAs) in their value chain to supplement technology-managed applications (TMAs). BMAs are applications or software that end users create or procure off-the-shelf and implement on their own; these typically are low-code or no-code software applications. Such BMAs offer the ability to automate or augment team-specific processes or information to enable enterprise-critical decision-making.

Technology teams build and manage TMAs to do a lot of heavy lifting by enabling business unit workflows and transactions and automating manual processes. TMAs are often the source systems for analytics and intelligence engines that drive off data warehouses, marts, lakes, lake-houses, etc. BMAs dominate the last mile in how these data infrastructures support critical reporting and decision making.

While BMAs deliver value and simplify complex processes, they bring with them a large set of challenges in security, opacity, controls, collaboration, traceability and audit. Therefore, on an ongoing basis, business-critical BMAs that have become relatively mature in their capabilities must be industrialized with optimal time and investment. Low-code platforms provide the right blend of ease of development, flexibility and governance that enables the rapid conversion of BMAs to TMAs with predictable timelines and low-cost, high-quality output.

Read our Perspective Paper for more insights on using low-code platforms to convert BMAs to TMAs that provide end users with rich interfaces, powerful configurations, easy integrations, and enhanced controls.
